Perturbation theory provides a mathematical method for finding an approximate solution to a problem, by starting from the exact solution of a related problem. A critical feature of the technique is a middle step that breaks the problem into “solvable” and “perturbation” parts. Perturbation theory is applicable if the problem at hand cannot be solved exactly, but can be formulated by adding a “small” term to the mathematical description of the exactly solvable problem.
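
In symbols, one common way to write this split is shown below. The notation is an assumption made here for illustration and is not taken from the author's work: $P_0$ is the exactly solvable problem, $P_1$ the perturbation, and $\varepsilon$ a small parameter that measures its size.

$$
P = P_0 + \varepsilon P_1, \qquad x = x_0 + \varepsilon x_1 + \varepsilon^2 x_2 + \cdots, \qquad |\varepsilon| \ll 1
$$

Here $x_0$ is the exact solution of $P_0$, and each further term is a correction built from the terms before it. The expansion is useful only when those corrections shrink quickly enough.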

Background

Perturbation theory supports a variety of applications, including Poincaré’s chaos theory, and is a strong platform for dealing with problems of dynamic behavior. However, the success of the method depends on our ability to preserve the analytical representation and solution as far as we can afford, both conceptually and computationally. As an example, I successfully applied these methods in 1978 to create a full analytical solution for the three-body lunar problem[1].

In 1687, Isaac Newton’s work on lunar theory attempted to explain the motion of the Moon under the gravitational influence of the Earth and the Sun (known as the three-body problem), but Newton could not account for variations in the Moon’s orbit. In the second half of the 1700s, Lagrange and Laplace advanced the view that the constants that describe the motion of a planet around the Sun are perturbed by the motion of other planets and vary as a function of time. This led to further discoveries by Charles-Eugène Delaunay (1816-1872) and Henri Poincaré (1854-1912). More recently, I used predictive computation of direct and indirect planetary perturbations on lunar motion to achieve greater accuracy and much wider representation. This line of discovery has paved the way for space exploration and further scientific advances, including quantum mechanics.

How Perturbation Theory is Used to Solve a Dynamic Complexity Problem

The three-body problem of Sun-Moon-Earth is an eloquent expression of dynamic complexity, whereby the motion of planets is perturbed by the motion of other planets and varies as a function of time. While we have not solved all the mysteries of our universe, we can predict the movement of a planetary body with great accuracy using perturbation theory.

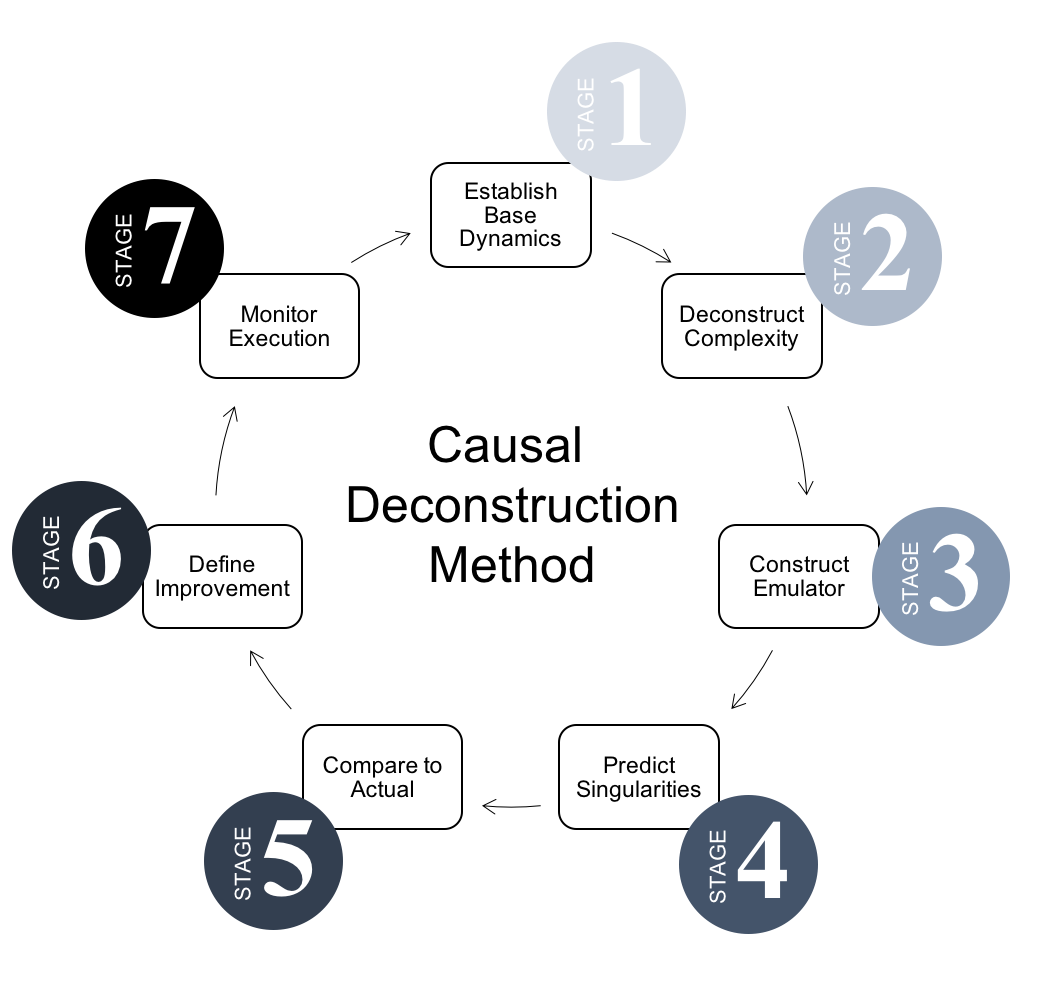

During my doctoral studies, I found that while Newton’s law is ostensibly true in a simple lab setting, its usefulness decreases as complexity increases. When trying to predict the trajectory (and the coordinates at a point in time) of the three heavenly bodies, the solution must account for the fact that gravity attracts these bodies to each other depending on their masses, distances, and directions. Their paths therefore undergo constant minute changes in velocity and direction, which must be taken into account at every step of the calculation. I found that the problem was solvable using common celestial mechanics if you start by taking only two celestial bodies, e.g. the Earth and the Moon.

But of course this solution is not correct, because the Sun was omitted from the equation. So the incorrect solution is then perturbed by adding the influence of the Sun. Note that the result is modified, not the problem, because there is no formula for solving a problem with three bodies. Now we are closer to reality but still far from precise, because the position and speed we used for the Sun were not its actual ones. The Sun’s actual position is calculated using the same common celestial mechanics as above, applied this time to the Sun and the Earth, and then perturbed by the influence of the Moon, and so on until an accurate representation of the system is achieved.
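
The back-and-forth correction described above can be sketched on a toy problem. The example below is an illustration only, not the lunar solution and not the author's method. The two coupled equations, the coupling strength `eps`, and the function name `successive_perturbation` are all assumptions chosen to keep the sketch short. Each unknown is first solved as if the other had no influence, then each result is repeatedly corrected using the latest estimate of the other.

```python
import math


def successive_perturbation(eps, tol=1e-12, max_iter=100):
    """Solve the toy coupled system (hypothetical, for illustration only)

        x = 1 + eps * sin(y)
        y = 2 + eps * cos(x)

    by starting from the uncoupled solutions and perturbing each
    result with the latest estimate of the other.
    """
    # Zeroth order: solve each equation as if the other body were absent.
    x, y = 1.0, 2.0

    for iteration in range(1, max_iter + 1):
        # Perturb x using the current (still imperfect) estimate of y.
        x_new = 1.0 + eps * math.sin(y)
        # Perturb y using the freshly corrected x.
        y_new = 2.0 + eps * math.cos(x_new)

        # Stop once a further correction no longer changes the result.
        if abs(x_new - x) < tol and abs(y_new - y) < tol:
            return x_new, y_new, iteration

        x, y = x_new, y_new

    raise RuntimeError("corrections did not settle; eps may be too large")


if __name__ == "__main__":
    x, y, steps = successive_perturbation(eps=0.1)
    print(f"x = {x:.10f}, y = {y:.10f}, after {steps} rounds of correction")
```

With a small `eps` each round of correction is much smaller than the one before it, which is why the procedure settles quickly. As the coupling grows, that guarantee weakens, and the sketch raises an error rather than returning an unreliable answer.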

Applicability to Risk Management

The notion that the future rests on more than just a whim of the gods is a revolutionary idea. A mere 350 years separate today’s risk-assessment and hedging techniques from decisions guided by superstition, blind faith, and instinct. During this time, we have made significant gains. We now augment our risk perception with empirical data and probabilistic methods to identify repeating patterns and expose potential risks, but we are still missing a critical piece of the puzzle. Inconsistencies still exist and we can only predict risk with limited success. In essence, we have matured risk management practices to the level achieved by Newton, but we cannot yet account for the variances between the predicted and actual outcomes of our risk management exercises.

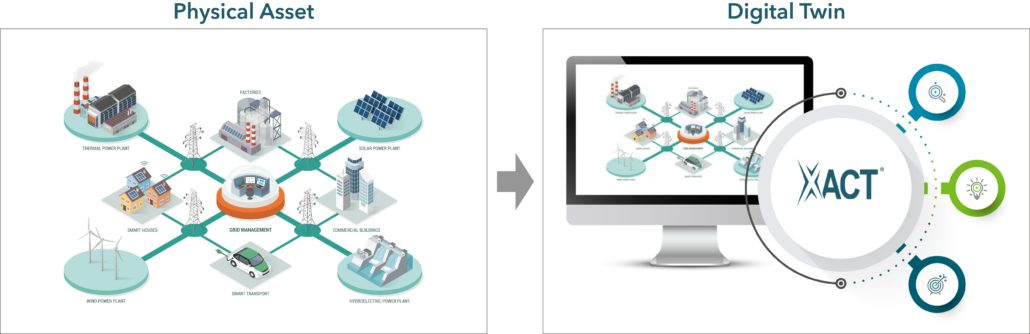

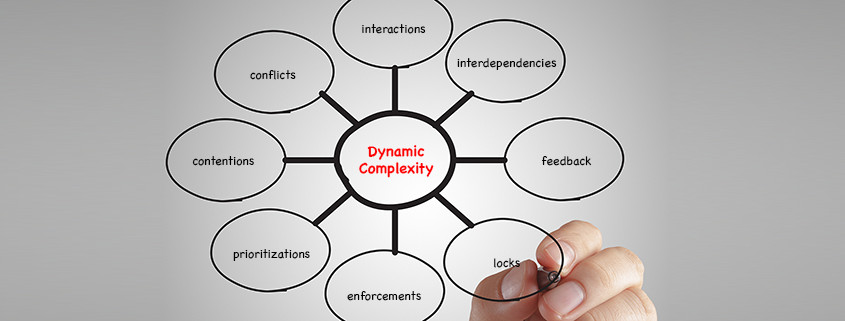

This is because most modern systems are dynamically complex—meaning system components are subject to the interactions, interdependencies, feedback, locks, conflicts, contentions, prioritizations, and enforcements of other components both internal and external to the system in the same way planets are perturbed by other planets. But capturing these influences either conceptually or in a spreadsheet is impossible, so current risk management practices pretend that systems are static and operate in a closed-loop environment. As a result, our risk management capabilities are limited to known risks within unchanging systems. And so, we remain heavily reliant on perception and intuition for the assessment and remediation of risk.

I experienced this problem firsthand as the Chief Technology Officer of First Data Corporation, when I found that business and technology systems do not always exhibit predictable behaviors. Despite the company’s wealth of experience, mature risk management practices, and deep domain expertise, we would sometimes be caught off guard by an unexpected risk or a sudden decrease in system performance. And so I began to wonder whether the hidden effects that made the prediction of satellite orbits difficult also created challenges in the predictable management of a business. Through my research and experience, I found that the mathematical solution provided by perturbation theory was universally applicable to any dynamically complex system, including business and IT systems.

Applying Perturbation Theory to Solve Risk Management Problems

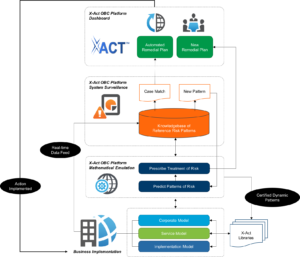

Without the ability to identify and assess the weight of dynamic complexity as a contributing factor to risk, uncertainty remains inherent in current risk management and prediction methods. When applied to prediction, probability and experience will always lead to uncertainties and prevent decision makers from achieving the optimal trade-off between risk and reward. We can escape this predicament by using the advanced methods of perturbation mathematics I discovered, now that computer processing power has advanced sufficiently to support perturbation-based emulation that efficiently and effectively exposes dynamic complexity and predicts its future impacts.

Emulation is used in many industries to reproduce the behavior of systems and explore unknowns. Take for instance space exploration. We cannot successfully construct and send satellites, space stations, or rovers into unexplored regions of space based merely on historical data. While the known data from past endeavors is certainly important, we must construct the data which is unknown by emulating the spacecraft and conducting sensitivity analysis. This allows us to predict the unpredicted and prepare for the unknown. While the unexpected may still happen, using emulation we will be better prepared to spot new patterns earlier and respond more appropriately to these new scenarios.

Using Perturbation Theory to Predict and Determine the Risk of Singularity

Perturbation theory seems to be the best-fit solution for providing accurate formulation of dynamic complexity that is representative of the web of dependencies and inequalities. Additionally, perturbation theory allows for predictions that correspond to variations in initial conditions and influences of intensity patterns. In a variety of scientific areas, we have successfully applied perturbation theory to make accurate predictions.

After numerous applications of perturbation-theory-based mathematics, we can affirm its problem-solving power. Philosophically, there exists a strong affinity between dynamic complexity and its discovery through perturbation-based solutions. At the origin, we used perturbation theory to solve gravitational interactions. Then we used it to reveal interdependencies in mechanics and dynamic systems that produce dynamic complexity. We feel strongly that perturbation theory is the right foundational solution for dynamic complexity across a large spectrum of dynamics: gravitational, mechanical, nuclear, chemical, etc. All of them represent a dynamic complexity dilemma. All of them have an exact solution if and only if all or a majority of individual and significant inequalities are explicitly represented in the solution.

An inequality is the dynamic expression of interdependency between two components. Such a dependency can be direct (e.g. an explicit connection, always first order) or indirect (a connection through a third component, which may be of any order on the basis that the perturbed also perturbs). As we can see, the solutions based on Newton’s work were only approximations of reality, as Newton’s principles considered only the direct couples of interdependencies as the fundamental forces.

We have successfully applied perturbation theory across a diverse range of cases from economic, healthcare, and corporate management modeling to industry transformation and information technology optimization. In each case, we were able to determine with sufficient accuracy the singularity point—beyond which dynamic complexity would become predominant and the predictability of the system would become chaotic.

Our approach computes the three metrics of dynamic complexity and determines the component, link, or pattern that will cause a singularity. It also allows users to build scenarios to fix, optimize, or push further the singularity point. It is our ambition to demonstrate clearly that perturbation theory can be used not only to accurately represent system dynamics and predict their limit or singularity, but also to reverse engineer a situation to provide prescriptive support for risk avoidance.

More details on how we apply perturbation theory to solve risk management problems and associated case studies are provided in my book, The Tyranny of Uncertainty.

[1] Abu el Ata, Nabil. Analytical Solution the Planetary Perturbation on the Moon. Doctor of Mathematical Sciences Sorbonne Publication, France. 1978.